Before Google attempts to rank websites or webpages in response to a search query, it must first fully understand the intent behind the query.

The algorithm used by Google to perform this task is called BERT (Bidirectional Encoder Representations from Transformers). It was released by the Google AI Language lab in the form of a paper which described how it uses a neural network to decipher context and intent by analyzing the words surrounding the main entity in a sentence or chunk of text, which is exactly what a search engine needs in order to interpret a query.

How does BERT work?

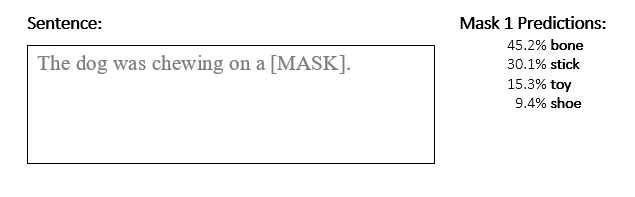

BERT essentially carries out two unsupervised tasks as part of its pre-training stage. The first task is called Masked Language Modelling (MLM), which randomly masks out a portion of the input tokens and uses the context of the words surrounding each masked token (referred to as [mask] in Figure 1) in order to predict what that masked token might be.

Figure 1: Example of Masked Language Modelling
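To make the masking step more concrete, here is a minimal sketch in plain Python. It follows the recipe described in the BERT paper (roughly 15% of tokens are selected; of those, 80% become `[MASK]`, 10% become a random token and 10% are left unchanged). The function name `mask_tokens`, the whitespace tokenisation and the tiny vocabulary are assumptions made for illustration only; the real model uses WordPiece tokens and a far larger vocabulary.

```python
import random

MASK_TOKEN = "[MASK]"


def mask_tokens(tokens, vocab, mask_prob=0.15, seed=None):
    """Return (masked_tokens, labels) for one MLM training example.

    labels[i] holds the original token at each selected position and
    None everywhere else, so only selected positions are predicted.
    Note: each position is selected independently with probability
    mask_prob, a simplification of sampling exactly 15% of positions.
    """
    rng = random.Random(seed)
    masked = list(tokens)
    labels = [None] * len(tokens)
    for i, token in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue  # position not selected for prediction
        labels[i] = token
        roll = rng.random()
        if roll < 0.8:
            masked[i] = MASK_TOKEN         # 80%: replace with [MASK]
        elif roll < 0.9:
            masked[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token unchanged
    return masked, labels


# Hypothetical example sentence, tokenised on whitespace for simplicity.
sentence = "the man went to the store to buy milk".split()
vocab = sorted(set(sentence))
masked, labels = mask_tokens(sentence, vocab, seed=0)
print(masked)
print(labels)
```

During pre-training, the model sees `masked` as input and is asked to recover the tokens stored in `labels`.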

The second task that BERT uses to train its model is called Next Sentence Prediction (NSP). As you might have guessed from the name, the core purpose of this task is to process a pair of sentences and predict whether the second one actually follows the first. For example, given the input sentence “I like dogs” (referred to as sentence A in the paper), BERT will calculate the probability that this sentence is followed by the sentence “Do you like them/dogs/animals?” (sentence B). During pre-training, sentence B is the true next sentence in 50% of the examples and a random sentence from the corpus in the other 50%. This 50% describes how the training data is built, not how accurate the model is: the paper reports that the final pre-trained model achieves 97% to 98% accuracy on NSP.
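The way NSP training pairs are built can be sketched in a few lines of Python. In the BERT paper, half of the pairs use the sentence that really follows sentence A (labelled `IsNext`) and the other half use a random sentence (labelled `NotNext`). The function `make_nsp_pairs` is a hypothetical helper, and drawing the random sentence from the same document is a simplification; the paper draws it from the wider corpus.

```python
import random


def make_nsp_pairs(sentences, seed=None):
    """Build (sentence_a, sentence_b, label) examples from an ordered document."""
    if len(sentences) < 3:
        raise ValueError("need at least three sentences to sample a negative")
    rng = random.Random(seed)
    pairs = []
    for i in range(len(sentences) - 1):
        sentence_a = sentences[i]
        if rng.random() < 0.5:
            # Positive example: B is the sentence that really follows A.
            pairs.append((sentence_a, sentences[i + 1], "IsNext"))
        else:
            # Negative example: B is any sentence other than A or its successor.
            candidates = [s for j, s in enumerate(sentences) if j not in (i, i + 1)]
            pairs.append((sentence_a, rng.choice(candidates), "NotNext"))
    return pairs


# Hypothetical mini-document used only to illustrate the pairing.
document = [
    "I like dogs.",
    "Do you like them?",
    "My neighbour has three.",
    "They bark at the postman.",
]
for sentence_a, sentence_b, label in make_nsp_pairs(document, seed=0):
    print(label, "|", sentence_a, "->", sentence_b)
```

The model is then trained as a binary classifier on these labels.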

Following the pre-training phase, the BERT model is then fine-tuned on datasets containing labelled data, often in the form of sentence pairs (pairs of the sentences A and B), with the choice of dataset depending on the task for which the model is being fine-tuned.
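As a rough illustration of what a sentence pair looks like when it is fed to the model, the sketch below packs sentences A and B into a single sequence using the special `[CLS]` and `[SEP]` tokens and the segment ids described in the BERT paper. The helper `pack_pair` and its lowercase whitespace tokenisation are assumptions for readability; the actual implementation uses WordPiece tokenisation.

```python
def pack_pair(sentence_a, sentence_b):
    """Pack two sentences into one BERT-style input sequence.

    Returns the token list and a parallel list of segment ids:
    0 for [CLS], sentence A and its [SEP]; 1 for sentence B and its [SEP].
    """
    tokens_a = sentence_a.lower().split()
    tokens_b = sentence_b.lower().split()
    tokens = ["[CLS]"] + tokens_a + ["[SEP]"] + tokens_b + ["[SEP]"]
    segment_ids = [0] * (len(tokens_a) + 2) + [1] * (len(tokens_b) + 1)
    return tokens, segment_ids


tokens, segment_ids = pack_pair("I like dogs", "Do you like them")
print(tokens)
# ['[CLS]', 'i', 'like', 'dogs', '[SEP]', 'do', 'you', 'like', 'them', '[SEP]']
print(segment_ids)
# [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]
```

For a classification task, the label attached to the pair is predicted from the model's output at the `[CLS]` position.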

Now that we have a basic understanding of how the BERT algorithm is trained and optimised, let’s take a quick look at what problems it can solve for Google’s search engine. First and foremost, these algorithms have improved the way Google can interpret chunks of text and sentences from search queries (and beyond). This is because, using MLM and NSP (on a token and sentence level respectively), BERT is able to resolve common problems in machine comprehension of languages, such as understanding the meaning of homonyms (words with multiple meanings), resolving anaphora and cataphora (words which refer to entities mentioned earlier or later in the text, respectively) and resolving lexical ambiguities (words that can act as verbs, nouns or adjectives depending on the context in which they are used).

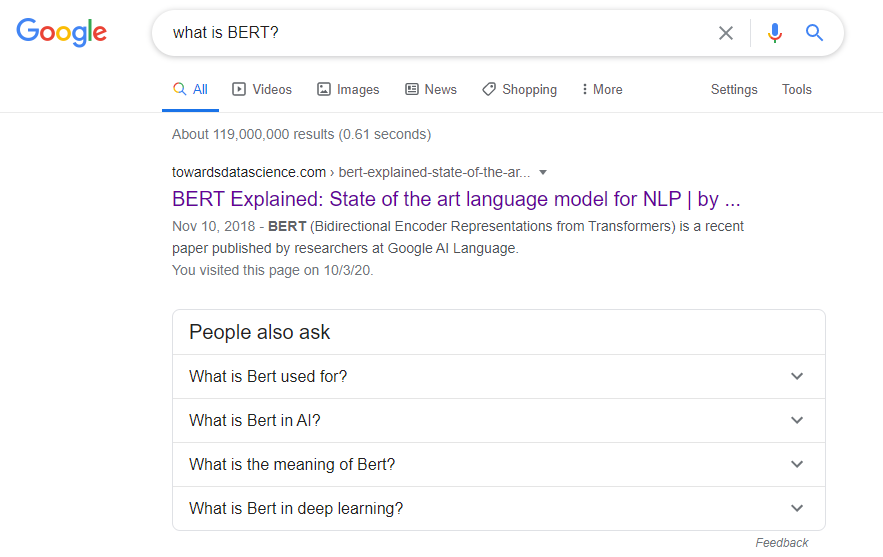

BERT has also significantly improved the understanding of question intent. This functionality is currently used in Google’s search engine, where answers to search queries are provided directly in the results (as shown in Figure 2). This feature has been optimised for the English language; however, BERT’s language comprehension capabilities mean that we should expect to see this functionality in other languages in the very near future.

Figure 2: Answers to search queries in result section on Google.com

Optimizing your SEO strategy for BERT

BERT’s algorithms have been coded fully in Python and you may have a look at the code on GitHub. For those of us who are less inclined to comb through lines of code, I shall discuss some simple ways to leverage BERT to improve your SEO strategy.

Believe it or not, Google has released a statement saying that it is impossible to optimize for BERT, since BERT presents an optimal aid to deciphering user intent and content intent/quality. Google claims that it is therefore able to rank content appropriately in response to a Google search query. This is a bold statement and, like any model, BERT is not infallible, so we can expect it to be updated and refined over time. With this in mind, we should not completely overhaul our SEO strategy to optimise for BERT, as any drastic changes to our strategy may need to be revisited once this happens. Notwithstanding this, we must keep in mind that BERT is intended to mimic a human’s capacity for language understanding by using a set of (highly complex) algorithmic rules.

All in all, there is no secret recipe or one-size-fits-all formula for optimizing your content for such algorithms. Nevertheless, since BERT is a deep learning tool which aims at understanding what users want, optimizing our content for our target audience also means optimizing it for BERT’s algorithms. Here are some of the best ways to optimize content for BERT and your users:

1. Forget about algorithms and bots

Since BERT is aimed at understanding the intent behind every user’s query, the best way to optimize for it is to provide unique and valuable content. Your content must answer the user’s queries and must contain the information that they are looking for. If your content has the answer to the query the user is searching for, then BERT should be able to bring it up for the user. The basics of SEO will remain the same, but you will now have to be more careful about the information that is presented in your content. It seems this is the only change that BERT has introduced into how SEO works: in addition to following all the best practices in SEO, your content will have to deliver real value to get a good ranking. Through NSP, BERT has a sense of what an answer to a query should look like (in terms of language structure). Therefore, if the answer to a user’s query is not found on your website, you will rank poorly or not at all. Simple.

2. Focus on what your niche market is looking for!

At a very low level, Google’s search algorithms will make use of MLM and will therefore play a “fill-in-the-blanks” game in response to a user’s query. This means that when creating content for our websites, we must tap into the mindset of our desired website visitors and create umbrella content that generally addresses what they might be looking for online, as well as related topics that fall under this umbrella. “Umbrella” content is referred to as pillar content, whilst a group of related content articles is referred to as a topic cluster. Using this approach to address Google rankings is a topic in itself; however, many SEO experts take a somewhat traditional marketing approach to this issue and attempt to create topic clusters which address the niche’s pain points. If you want your content to rank well on the new BERT-managed Google, you will have to make sure that it is aimed at providing value to your target audience.

3. Producing simple to-the-point content is key

A final, but not least important, point to consider is that the BERT algorithm is constantly assessing grammar and language. It does this not only to gauge content quality, but also to derive its own understanding of the content’s intent. For this reason, we must strive to keep our content as simple and self-explanatory as possible, and steer away from overly complex sentence structure and extravagant wording. Such language is difficult for BERT to process and understand, as well as difficult to translate into other languages. BERT is pre-trained on BooksCorpus and English Wikipedia articles. This means that it has seen many more examples of simple, conversational styles of text during training, and is therefore more likely to recognise and derive meaning from similar styles of writing. For more in-depth tips on how to make your content more relatable and BERT-friendly, here is a great resource.
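One quick way to spot overly complex sentences in a draft is to flag those that run past a word limit. The snippet below is only a rough editorial heuristic, not something BERT or Google is known to compute; the function name `long_sentences` and the 25-word threshold are assumptions chosen for illustration.

```python
import re


def long_sentences(text, max_words=25):
    """Return (word_count, sentence) for every sentence longer than max_words."""
    # Split after ., ! or ? followed by whitespace (a deliberately naive rule).
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    flagged = []
    for sentence in sentences:
        word_count = len(sentence.split())
        if word_count > max_words:
            flagged.append((word_count, sentence))
    return flagged


# Hypothetical draft text used only to demonstrate the check.
draft = (
    "Keep it short. "
    "This sentence, on the other hand, wanders through clause after clause, "
    "piling on qualifications and asides that the reader never asked for, "
    "until the original point has been thoroughly buried. "
    "See the difference?"
)
for word_count, sentence in long_sentences(draft):
    print(word_count, "words:", sentence)
```

Sentences that get flagged are good candidates for splitting or simplifying before publishing.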

Many SEO experts have written blog posts on using the BERT algorithm to generate meta descriptions. As we all know, meta descriptions are an important factor in how well a piece of content performs in the search rankings. The use of BERT to generate meta descriptions is a whole research topic in itself; however, if you want to learn more about the process involved, here is a guide to help you with that.

TL;DR

Google’s first open-source update, BERT, has certainly produced shockwaves in the Machine Learning community. Although the results achieved by BERT are state-of-the-art, there is still room to make its algorithms more accurate, and we can expect updates to them in the future. BERT is the algorithm used in training the Google NLP models and aims at gauging a deeper meaning of content and user intent, both in terms of website content and search queries. All in all, it seems that following the release of BERT, creators within the SEO industry are going to have to make their content more valuable and human-friendly. Isn’t it ironic that an AI is encouraging us to be more human?

The changes that BERT has brought to the way that Google understands and processes search queries will ensure that content creators will be driven to provide direct answers to queries. This will be a refreshing change from being forced to read through chunks of flowery language and unnecessary jargon only to find answers to our queries hidden at the end of an online article. This update is also going to make the process of SEO more natural as the content that has the most value and provides the user with the most relevant information will rank better than content that is full of buzzwords but provides no unique and valuable content. It seems that BERT’s intentions are for SEO experts to revert to more traditional marketing approaches as part of their SEO strategy.

Another interesting aspect of the BERT update is the way in which it processes language. For this reason, we can expect features that are currently only available in English on Google.com to be extended to the other languages that Google supports.

The final take-home message is to put the end-user at the focal point of your SEO strategy. Make your content better for your users and BERT will treat it kindly!

Final Thoughts

References

Search Engine Journal | How to Use BERT to Generate Meta Descriptions at Scale

Jean-Christophe Chouinard | Get BERT Score for SEO

SemRush | Google NLP API Tool: Optimize Your Content to the Next Level

Moz | Better Content Through NLP (Natural Language Processing)

Woorank | How to Write Meta Descriptions Using BERT

Dr Michaela Spiteri BEng, MSc, PhD (AI / Healthcare domain), is a well-published researcher in the field of AI and machine learning. She is the founder of AI consultancy Analitigo Ltd and currently works as the lead researcher at Gainchanger.